The complexity of deploying a Kubernetes cluster

Where can we deploy Kubernetes clusters? Can we run them on VMs in our data center? On cloud VMs? What if we don't want to manage the cluster at all?

It's safe to say that everything related to Kubernetes can be (quite) overwhelming, at least in the beginning, while you are learning it. It can stay overwhelming later on, too, given everything you need to take care of: upgrades, deprecations, backward compatibility, and so on.

The reason is simple: it is a technology that evolves quickly, and everyone wants (and needs) to keep up. It has also become a trend. It seems that everyone is doing it, even though not everyone needs it.

Let's return to the vastness of the Kubernetes ecosystem. It shows both in the learning material and in the deployment options. In this article, we'll focus on the latter: all the places where you can run Kubernetes clusters. Along the way, I hope to present Kubernetes in a slightly more approachable way and to answer the questions from the beginning.

Just a note before we start: I am going to use the term "deployment" loosely in this article (not in the sense of the Kubernetes Deployment resource). It covers the installation, configuration, and operation of a Kubernetes cluster.

Types of deployments

Even though there are many ways to deploy a Kubernetes cluster, we can group all of them into four types:

- On-premises deployment.

- Cloud-managed deployment.

- Hybrid deployment.

- Platform deployment.

On-premises deployment

At the risk of stating the obvious: this option assumes that you (and hopefully your team) install and manage the VMs on which you will then install and manage the Kubernetes cluster. Because the installation is complex, you usually will not install each component one by one; instead, you will use a deployment tool.

There are different tools for this (of course there are). For brevity, we will mention only a few that are widely used in the wild.

Kubeadm is the first one. It is the officially supported tool for deploying Kubernetes, and it provides a best-practice "fast path" to get a Kubernetes cluster up and running. It assumes that the VMs on which you want to run the cluster are available and properly configured. The official documentation for installing a self-managed Kubernetes cluster revolves around this tool.
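To make "properly configured" a bit more concrete, here is a small Python sketch of two node prerequisites mentioned in the kubeadm installation docs: swap should be off (by default the kubelet refuses to start when swap is detected) and IPv4 packet forwarding should be enabled. The helper names are hypothetical and this is only an illustration; kubeadm runs its own, much more thorough preflight checks as part of `kubeadm init`.

```python
from __future__ import annotations

from pathlib import Path


def swap_is_active(proc_swaps: str) -> bool:
    # /proc/swaps starts with a header line; every further non-empty line is an active swap area.
    lines = [line for line in proc_swaps.splitlines() if line.strip()]
    return len(lines) > 1


def ip_forward_enabled(sysctl_value: str) -> bool:
    # /proc/sys/net/ipv4/ip_forward holds "1" when IPv4 packet forwarding is on.
    return sysctl_value.strip() == "1"


def check_node(proc: Path = Path("/proc")) -> list[str]:
    """Hypothetical helper: return human-readable problems (empty list = both checks pass)."""
    problems = []
    if swap_is_active((proc / "swaps").read_text()):
        problems.append("swap is active (the kubelet refuses to start with swap by default)")
    if not ip_forward_enabled((proc / "sys" / "net" / "ipv4" / "ip_forward").read_text()):
        problems.append("net.ipv4.ip_forward is not set to 1")
    return problems
```

On a Linux node, `check_node()` returns the list of things to fix before you run `kubeadm init`; the parsing helpers take plain strings so they are easy to test on any machine.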

Next up is K3s, a lightweight Kubernetes distribution suited to edge devices, low-powered computers, servers, and VMs. Created by Rancher, it is a great solution if you are opting for a simple, easy, and lightweight deployment.
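K3s can read its settings from a config file (by default `/etc/rancher/k3s/config.yaml`) whose keys mirror the CLI flags without the leading `--`. The Python sketch below renders such a file; the hostname is a hypothetical example, and the renderer is deliberately naive (it does not escape quotes inside values).

```python
from __future__ import annotations


def render_k3s_config(options: dict) -> str:
    """Render a flat K3s config: scalars, booleans, and lists of strings."""
    lines = []
    for key, value in options.items():
        if isinstance(value, list):
            lines.append(f"{key}:")
            lines.extend(f'  - "{item}"' for item in value)
        elif isinstance(value, bool):
            lines.append(f"{key}: {str(value).lower()}")
        else:
            lines.append(f'{key}: "{value}"')
    return "\n".join(lines) + "\n"


config = render_k3s_config({
    "write-kubeconfig-mode": "0644",      # let non-root users read the generated kubeconfig
    "tls-san": ["k3s.example.internal"],  # hypothetical extra name for the API server certificate
    "disable": ["traefik"],               # skip the bundled Traefik ingress controller
})
print(config)
```

With the file in place, the documented install script (`curl -sfL https://get.k3s.io | sh -`) picks it up when the server starts.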

Third is Kind (Kubernetes IN Docker), a tool for running local Kubernetes clusters that uses Docker containers as nodes. It was primarily designed for testing Kubernetes itself, but it can also be used for local development and CI. It is not recommended for production.

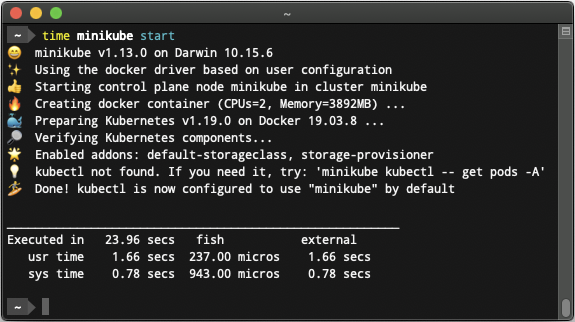
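A multi-node Kind cluster is described by a small YAML file. This Python sketch generates one with a single control-plane node and a configurable number of workers; each entry becomes a Docker container when you run `kind create cluster --config kind-config.yaml` (the file name is just an example).

```python
from __future__ import annotations


def kind_config(workers: int = 2) -> str:
    """Return a Kind cluster config with one control-plane node and `workers` worker nodes."""
    lines = [
        "kind: Cluster",
        "apiVersion: kind.x-k8s.io/v1alpha4",
        "nodes:",
        "- role: control-plane",
    ]
    lines += ["- role: worker"] * workers  # one Docker container per worker
    return "\n".join(lines) + "\n"


print(kind_config(workers=2))
```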

Minikube is another great tool meant for development and/or CI. It quickly sets up a local cluster and makes deployment and management easy. As with Kind, it is not recommended for production.

Last but not least is K3d, another tool that is great for development and CI, but not for production. A community project, it is a lightweight wrapper that runs K3s in Docker, which helps you test your applications before deploying them to K3s.
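For comparison, here is how a small multi-node local cluster is created with Minikube and K3d. The Python sketch only builds the command lines (it does not run them); the cluster name and node counts are hypothetical examples.

```python
from __future__ import annotations

import shlex


def minikube_start(nodes: int = 2, driver: str = "docker") -> list[str]:
    # --nodes and --driver are standard `minikube start` flags.
    return ["minikube", "start", f"--nodes={nodes}", f"--driver={driver}"]


def k3d_create(name: str = "dev", agents: int = 2) -> list[str]:
    # K3d runs K3s in Docker: one server (control plane) plus `agents` worker nodes.
    return ["k3d", "cluster", "create", name, "--servers", "1", "--agents", str(agents)]


for cmd in (minikube_start(), k3d_create()):
    print(shlex.join(cmd))
```

Passing either list to `subprocess.run(cmd, check=True)` would actually create the cluster, provided the tool and Docker are installed.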

Not complex enough? Wait for it.

Cloud-managed deployment

If you do not want to go through the various stages of headaches, despair, and self-doubt, you will opt for this type of deployment. Just kidding: the first option is good if you want everything your way, want to learn more, and want to set everything up from scratch.

With this option, the Kubernetes cluster is a managed service offered by a Cloud Provider. It is a good fit if you want everything already set up and preconfigured.

It also has some downsides: you cannot access some or all of the control plane components (e.g., etcd and the API server), you depend on the provider to support the latest Kubernetes versions, and some options are not as simple to configure as they would be on your own Kubernetes cluster.

Here, I'll mention only three services, which are largely similar but offered by different Cloud Providers. Listed alphabetically by abbreviation, they are Azure Kubernetes Service (AKS), Amazon Elastic Kubernetes Service (EKS), and Google Kubernetes Engine (GKE).

Each has different deployment options and configurations, but in essence they are the same: the Cloud Provider runs the Kubernetes cluster, so you don't need to set it up, configure it, or manage the control plane components yourself. You just need to concentrate on running your applications and possibly adding other components to the cluster.

You get a fully functional, production-grade Kubernetes cluster at a price that varies by Cloud Provider. Which option to choose depends mainly on your preferred Cloud Provider, on whether you already run workloads with a specific provider, and on price.
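One way to see that the three services are "in essence the same": once the cluster exists, each provider's CLI writes an entry into your local kubeconfig, and from then on `kubectl` works identically. This Python sketch only builds those commands; the cluster, region, and resource group names are hypothetical.

```python
from __future__ import annotations

import shlex


def get_credentials_cmd(provider: str, cluster: str, *, region: str = "", resource_group: str = "") -> list[str]:
    """Return the CLI command that adds `cluster` to the local kubeconfig."""
    if provider == "aks":
        # Azure CLI: AKS clusters are addressed by resource group and name.
        return ["az", "aks", "get-credentials", "--resource-group", resource_group, "--name", cluster]
    if provider == "eks":
        # AWS CLI: EKS clusters are addressed by region and name.
        return ["aws", "eks", "update-kubeconfig", "--region", region, "--name", cluster]
    if provider == "gke":
        # Google Cloud CLI: regional cluster shown; zonal clusters use --zone instead.
        return ["gcloud", "container", "clusters", "get-credentials", cluster, "--region", region]
    raise ValueError(f"unsupported provider: {provider!r}")


examples = {
    "aks": {"resource_group": "demo-rg"},
    "eks": {"region": "eu-west-1"},
    "gke": {"region": "europe-west1"},
}
for provider, kwargs in examples.items():
    print(shlex.join(get_credentials_cmd(provider, "demo", **kwargs)))
```

After any of these, `kubectl get nodes` talks to the managed cluster the same way it would to your own.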

This is where the complexity begins.

Hybrid deployment

If you are more into hybrid setups (you already have your own data center, want to install Kubernetes there, and plug it into a Cloud Provider or a platform), this option is for you. It lets you install and configure a Kubernetes cluster on existing VMs or servers with relative ease, and then connect it to your platform or to a Cloud Provider.

RKE (Rancher Kubernetes Engine) is one of those tools. It is a CNCF-certified Kubernetes distribution that runs entirely within Docker containers, which makes it suitable for hybrid environments. It addresses the common frustration of Kubernetes installation complexity by removing most host dependencies and offering a stable path for deployment, upgrades, and rollbacks.
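An RKE cluster is described in a `cluster.yml` file that lists the existing machines and the roles each one plays (`controlplane`, `etcd`, `worker`); `rke up`, run in the same directory, then connects to the nodes over SSH and brings the cluster up. The Python sketch below renders a minimal version of that file; the addresses and SSH user are hypothetical, and a real file usually carries more settings.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    address: str      # hypothetical IP of an existing VM or server
    user: str         # SSH user RKE connects as
    roles: list[str]  # any of: controlplane, etcd, worker


def render_cluster_yml(nodes: list[Node]) -> str:
    lines = ["nodes:"]
    for node in nodes:
        lines.append(f"  - address: {node.address}")
        lines.append(f"    user: {node.user}")
        lines.append("    role:")
        lines.extend(f"      - {role}" for role in node.roles)
    return "\n".join(lines) + "\n"


cluster_yml = render_cluster_yml([
    Node("10.0.0.10", "ubuntu", ["controlplane", "etcd"]),
    Node("10.0.0.11", "ubuntu", ["worker"]),
])
print(cluster_yml)
```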

The second one is EKS Anywhere. It is an open-source deployment option for Amazon EKS that allows you to create and operate Kubernetes clusters on-premises, with optional support offered by AWS. Its supported deployment targets include bare metal, CloudStack, and VMware vSphere.
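With EKS Anywhere, the usual flow is to generate a cluster spec for your target, edit it, and create the cluster from it with `eksctl anywhere`. This Python sketch maps the targets mentioned above to EKS Anywhere provider names and builds the two commands; the cluster names are hypothetical, and bare-metal clusters need extra inputs (a hardware inventory) that are left out here.

```python
from __future__ import annotations

import shlex

# Deployment targets from the text mapped to EKS Anywhere provider names.
PROVIDERS = {
    "bare metal": "tinkerbell",
    "cloudstack": "cloudstack",
    "vmware vsphere": "vsphere",
}


def eksa_commands(cluster: str, target: str) -> list[list[str]]:
    provider = PROVIDERS[target.lower()]
    return [
        # Prints a cluster spec template to stdout; redirect it to <cluster>.yaml and edit it.
        ["eksctl", "anywhere", "generate", "clusterconfig", cluster, "--provider", provider],
        # Creates the cluster from the edited spec. Bare metal also needs a hardware
        # inventory; check the EKS Anywhere docs for the exact options.
        ["eksctl", "anywhere", "create", "cluster", "-f", f"{cluster}.yaml"],
    ]


for cmd in eksa_commands("prod", "VMware vSphere"):
    print(shlex.join(cmd))
```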

This is where the complexity grows even further.

Platform deployment

The option that offers the most "bang for your buck" in terms of "ease" of deployment, configuration, and management is a Kubernetes Management Platform. It is a good fit if you run everything on Kubernetes, have multiple Kubernetes clusters both on-premises and in the cloud, and want a central place to manage, configure, access, provision, and deploy everything.

The biggest "players" in the Kubernetes Management Platform domain are Red Hat OpenShift, SUSE Rancher, VMware Tanzu, and Google Anthos.

Without diving deep into what each of these platforms offers or lacks, or the pros and cons of one against the others, I want to mention one thing: in a nutshell, they all offer the same core benefits, namely simplified cluster operations, consistent security policy and user management, and access to shared tools and services.

If you want all that, go ahead and evaluate one of the Platforms mentioned above, and good luck!

I hope this is where we are finished with all this complexity.

Summary

The decision whether to use Kubernetes can be hard at the beginning, especially when we are not aware of other options. Maybe we don't even need a Kubernetes cluster (or multiple clusters); maybe there is a simpler, easier solution.

If, in the end, you choose the path of the Kubernetes ecosystem, I hope this article gives you the bigger picture and helps you understand the different ways to deploy a Kubernetes cluster in your environment.

Is there another option I haven't considered? Do the types I've described make sense? Share your take in the comments; I'm eager to hear your view and learn more about the topic.

For more Kubernetes-related articles, subscribe to my newsletter.